Abstraction Recognition

In an age where computers can tell us whether a photo contains a hot dog, it is easy to extrapolate that computers will soon be able to replicate human creativity. This is a real debate I often have with engineer friends: I, of course, argue that it is impossible, while they argue that it is not far off.

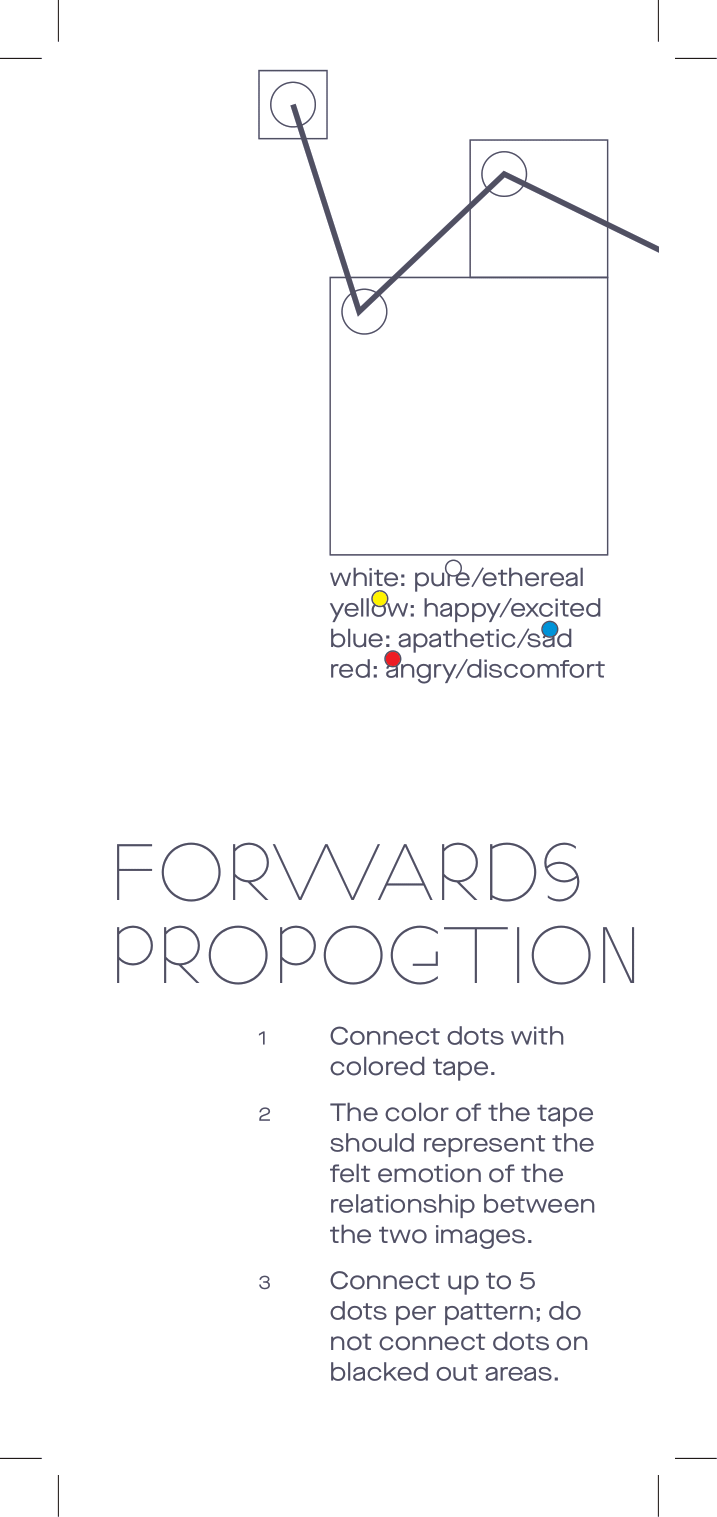

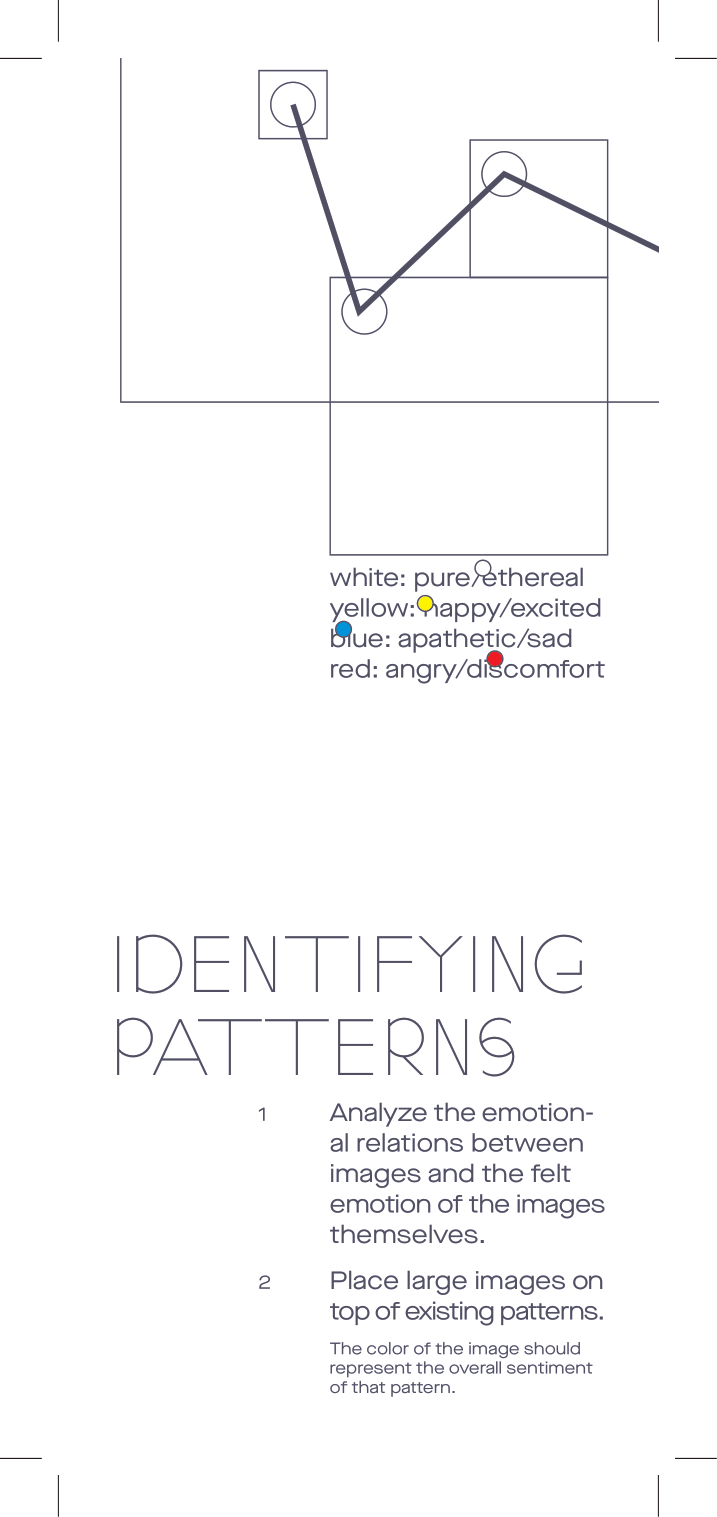

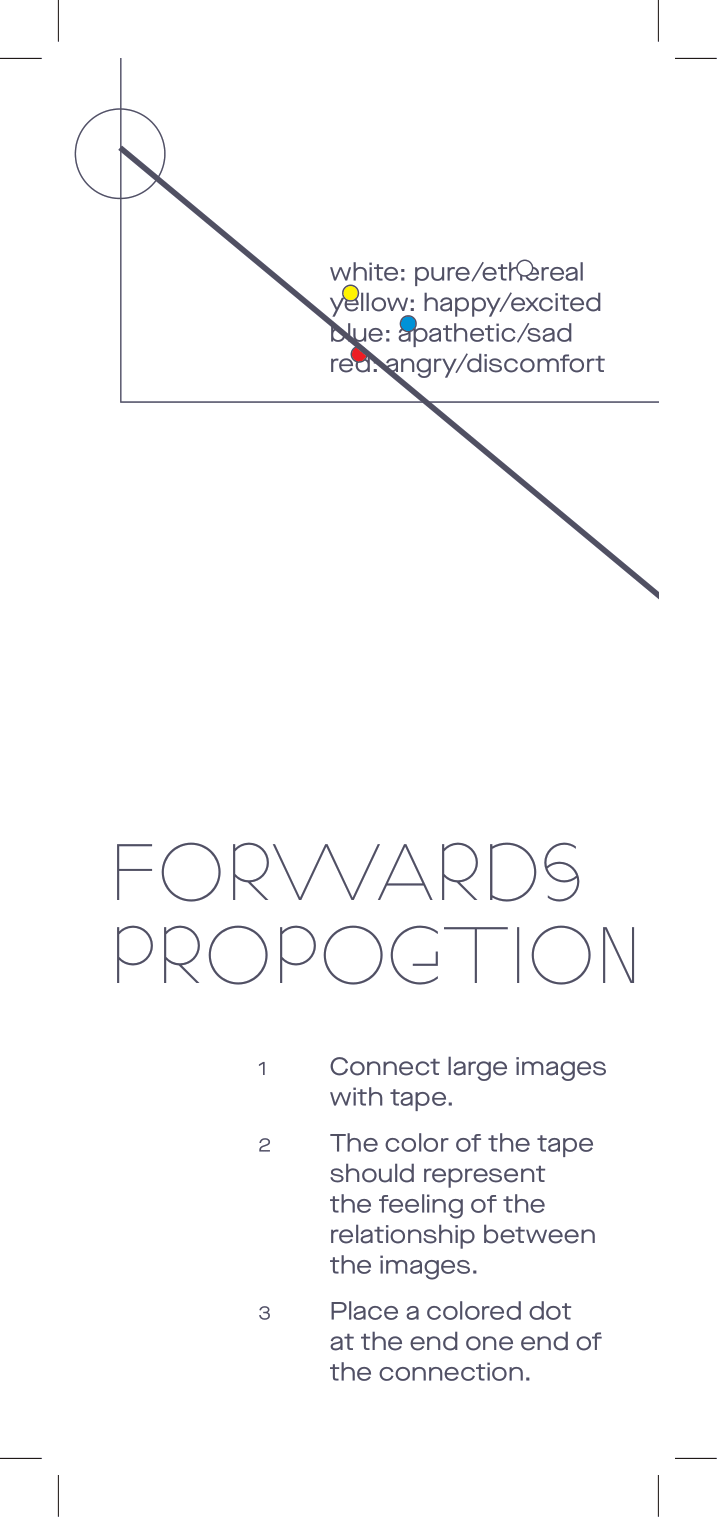

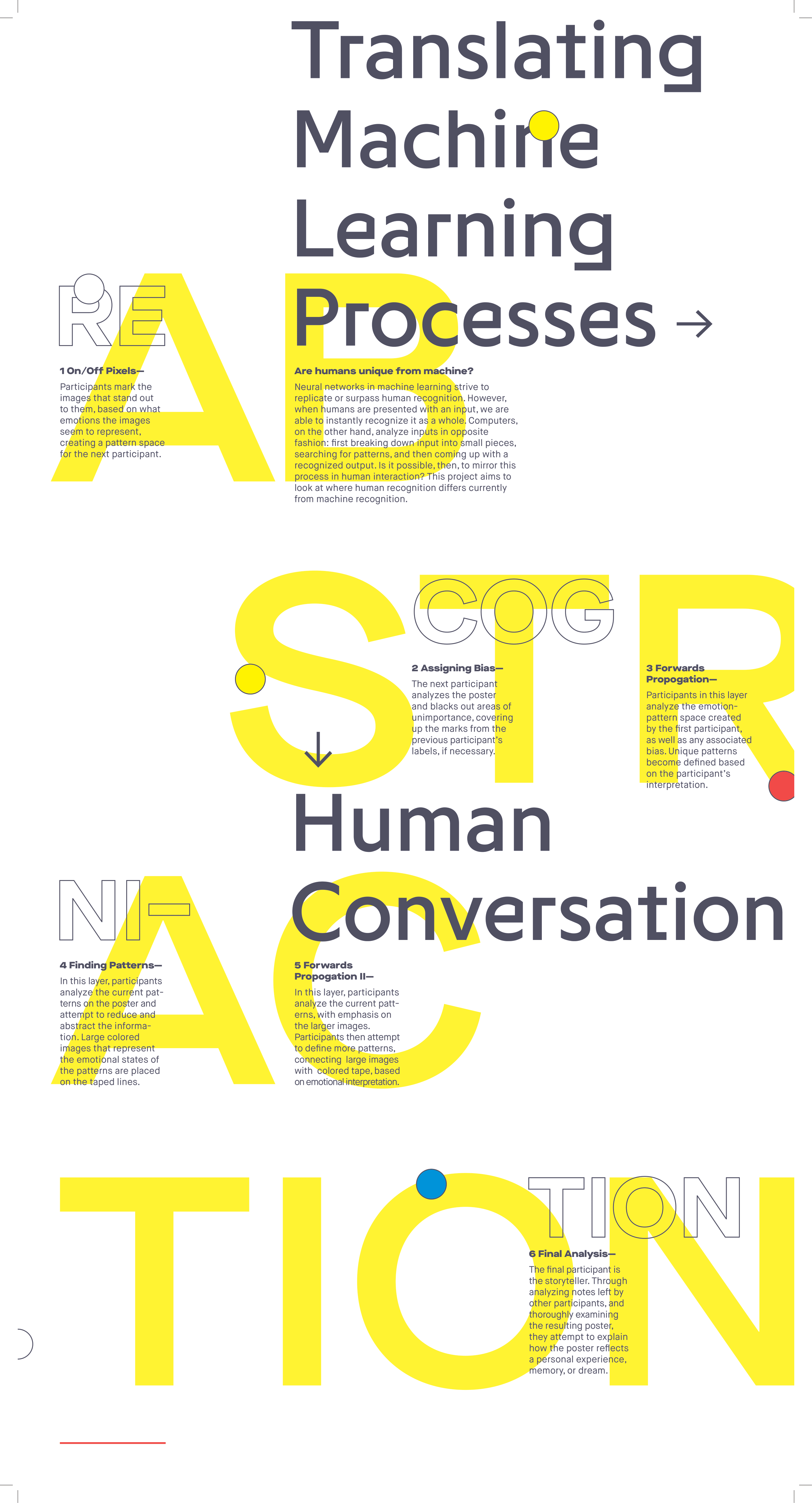

Each participant is given an instruction that maps to one step in a neural network. However, instead of asking participants to literally recreate the computation, I translated the neural network into a step-by-step “process” of human conversation. Using posters made from image recognition and machine learning data sets, I asked participants to seek out the patterns, emotions, feelings, and memories that the images triggered. Each participant then passed their perceptions and perspective on to the next person in the “network”.

This project was advised by Kyuha Shim.

Abstraction Recognition is an interactive, participatory experience inspired by machine learning and image recognition, as well as by the work of Luna Maurer and Conditional Design. Basic image recognition (just one of many possible applications of neural networks) works by breaking input images down into pixels and gradually recognizing patterns in clusters of those pixels. (This is an extremely reductive description of how neural networks work, which are hard to describe even in a few sentences. For this project, I spent some time learning about neural networks and found these videos concise and digestible, even for a non-mathematician like me.)
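The project itself involved no code, but the idea of "finding patterns in clusters of pixels" can be made concrete with a toy sketch. It assumes a convolutional layer, one common building block for image recognition, though not necessarily the architecture covered in the videos. A small filter slides over every 3×3 cluster of pixels and scores how strongly that cluster matches the pattern the filter encodes. The image, the kernel values, and the function names `convolve2d` and `relu` are all hypothetical. Real networks learn their filter weights from training data and stack many such layers.

```python
# Toy sketch (hypothetical values): one convolutional "pattern detector"
# applied to a tiny grayscale image, as in the first layer of an image-recognition network.

def convolve2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the operation CNN layers apply."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    result = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum over one cluster (patch) of pixels.
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        result.append(row)
    return result

def relu(feature_map):
    """Keep only positive responses, i.e. places where the pattern was found."""
    return [[max(0, v) for v in row] for row in feature_map]

# 5x5 image: dark pixels (0) on the left, bright pixels (1) on the right,
# so there is a vertical edge between columns 1 and 2.
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Hand-written vertical-edge detector; a trained network would learn
# weights like these instead of having them written by hand.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

if __name__ == "__main__":
    feature_map = relu(convolve2d(image, kernel))
    print(feature_map)  # [[3, 3, 0], [3, 3, 0], [3, 3, 0]]
```

The high values (3) mark the pixel clusters that straddle the edge. The zeros mark uniformly bright clusters, where the pattern is absent. Deeper layers would repeat this process on these feature maps, combining simple patterns such as edges into more abstract ones.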

This project sets out to compare computer recognition to human capability: a pixel is to a computer what an experience or memory is to the human brain, a basic unit. Machine learning has enabled deepfakes, contextual image and text generation, and audio that is indistinguishable from a human voice. The heart of human creativity lies in recognizing patterns, abstracting those patterns, and generating new things, and computers can now do that too. If we cannot tell the difference between human-made and computer-generated work, does its origin eventually matter?

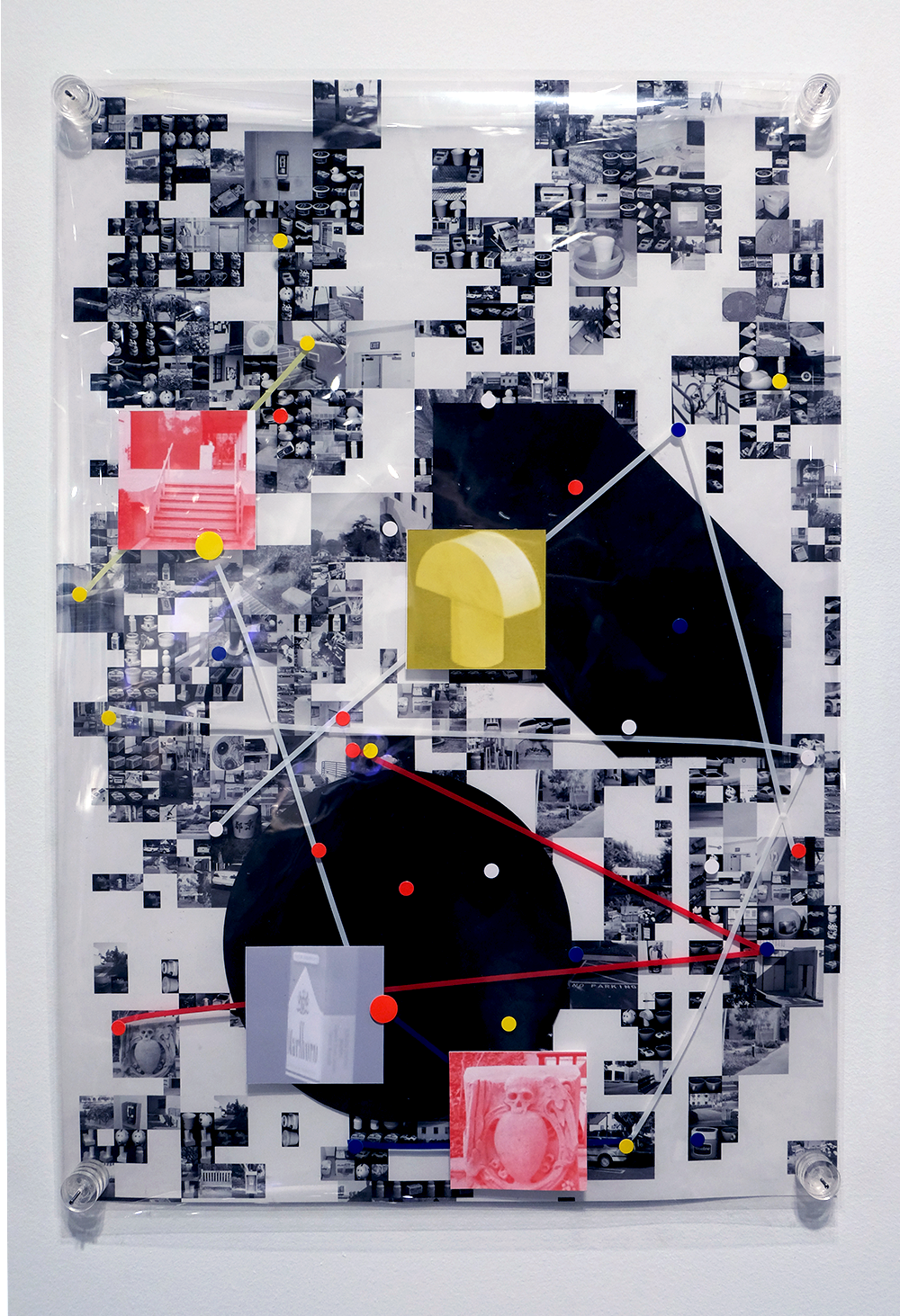

Final Results

Installation